Integrating Actions on Google with Home Assistant

Creating custom apps for Google Assistant

This week I went to Google Cloud's annual Next conference in London. One of the most interesting sessions of the two days was titled "Intro to Building for Google Home and Google Assistant with Serverless". After seeing how easy it was to build a custom app for Google Assistant powered devices such as phones and Google Homes, I wanted to try building something myself.

In no time at all I had a custom assistant app that let me ask my Google Home about my house and the integrations in my Home Assistant setup. For example, "Ok Google...":

- "Ask Edson Home what the temperature is inside the house?"

- "Ask Edson Home what lights have been left on?"

- "How long until pay day?"

- "Watch Sky One"

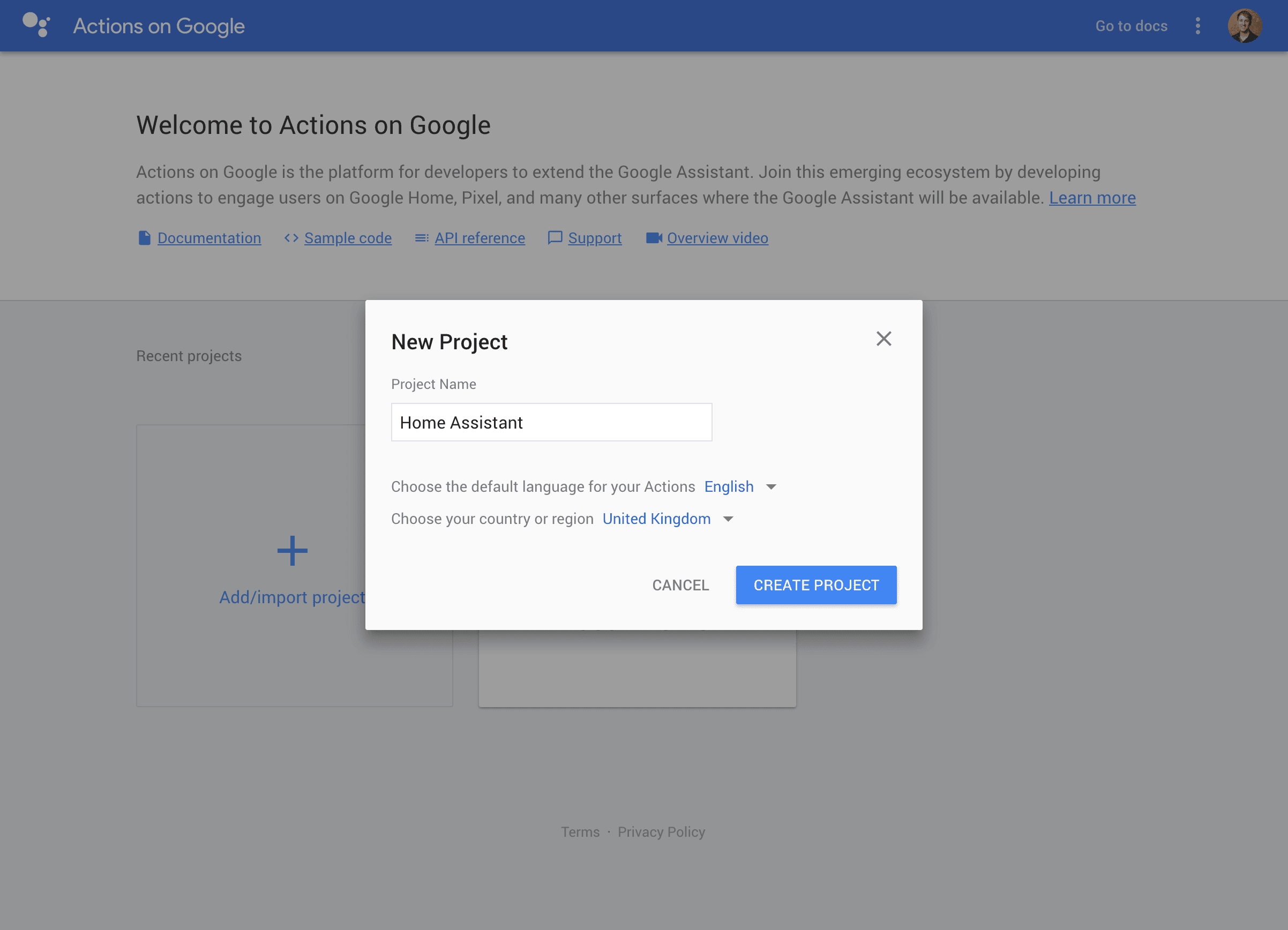

To build your own, head to https://console.actions.google.com and create a new project:

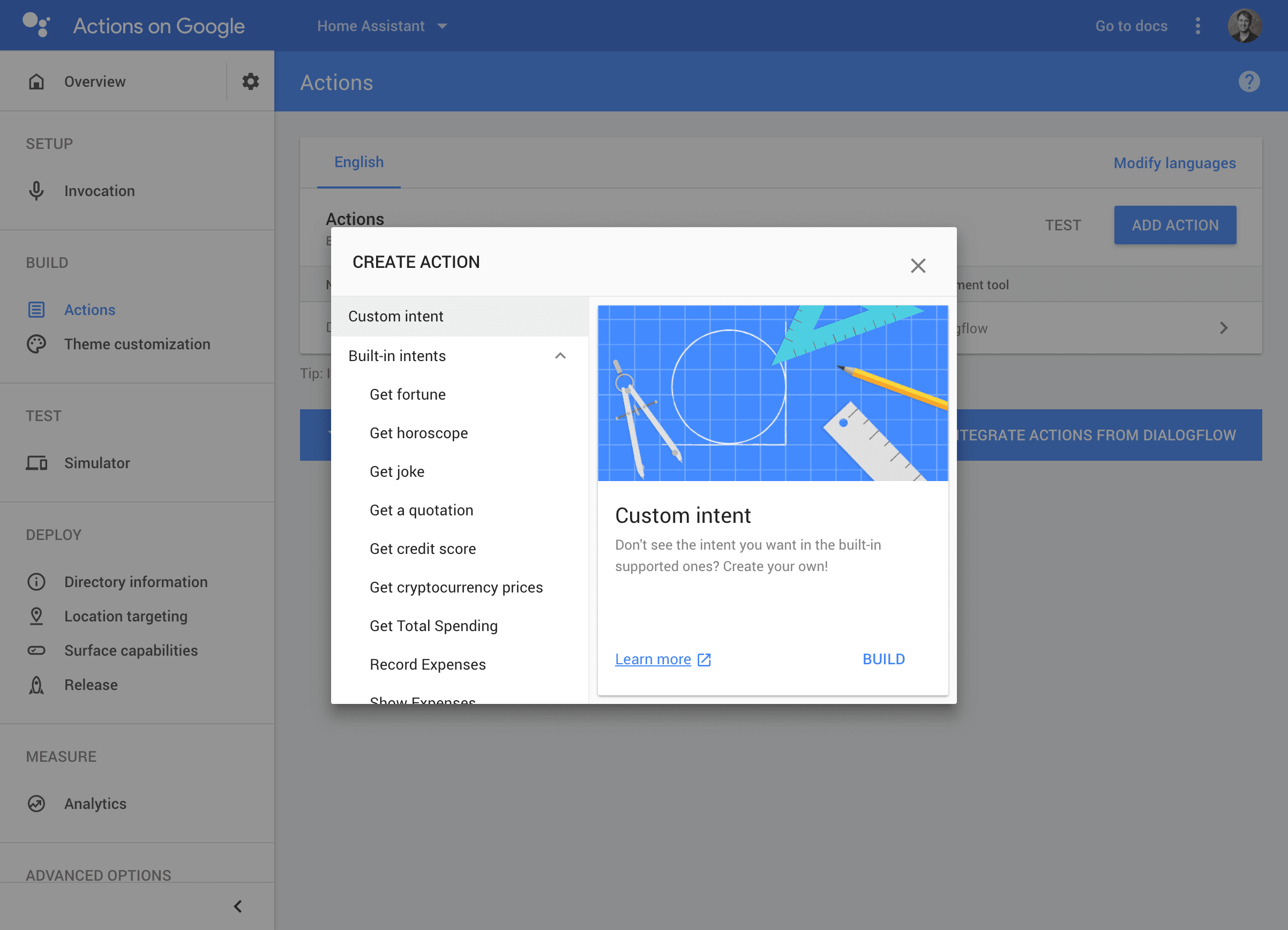

From there, in the left menu, select Actions -> Add Action -> Custom intent -> Build. This takes you to the Dialogflow service. The first thing we need to do is let Dialogflow talk to our Home Assistant instance. Home Assistant already provides a Dialogflow component, so a custom integration only takes minutes.

In the Dialogflow sidebar, open the Fulfillment item, enable the webhook option and set the URL to https://{YOUR_HOME_ASSISTANT_URL}/api/dialogflow?api_password={YOUR_HOME_ASSISTANT_PASSWORD}, replacing the placeholders with your Home Assistant URL and password. Dialogflow calls this URL from Google's servers, so your instance must be reachable from the internet over HTTPS.

In your Home Assistant configuration.yaml, add the following line:

```yaml
dialogflow:
```

This enables the Dialogflow API endpoint used in the webhook URL above.

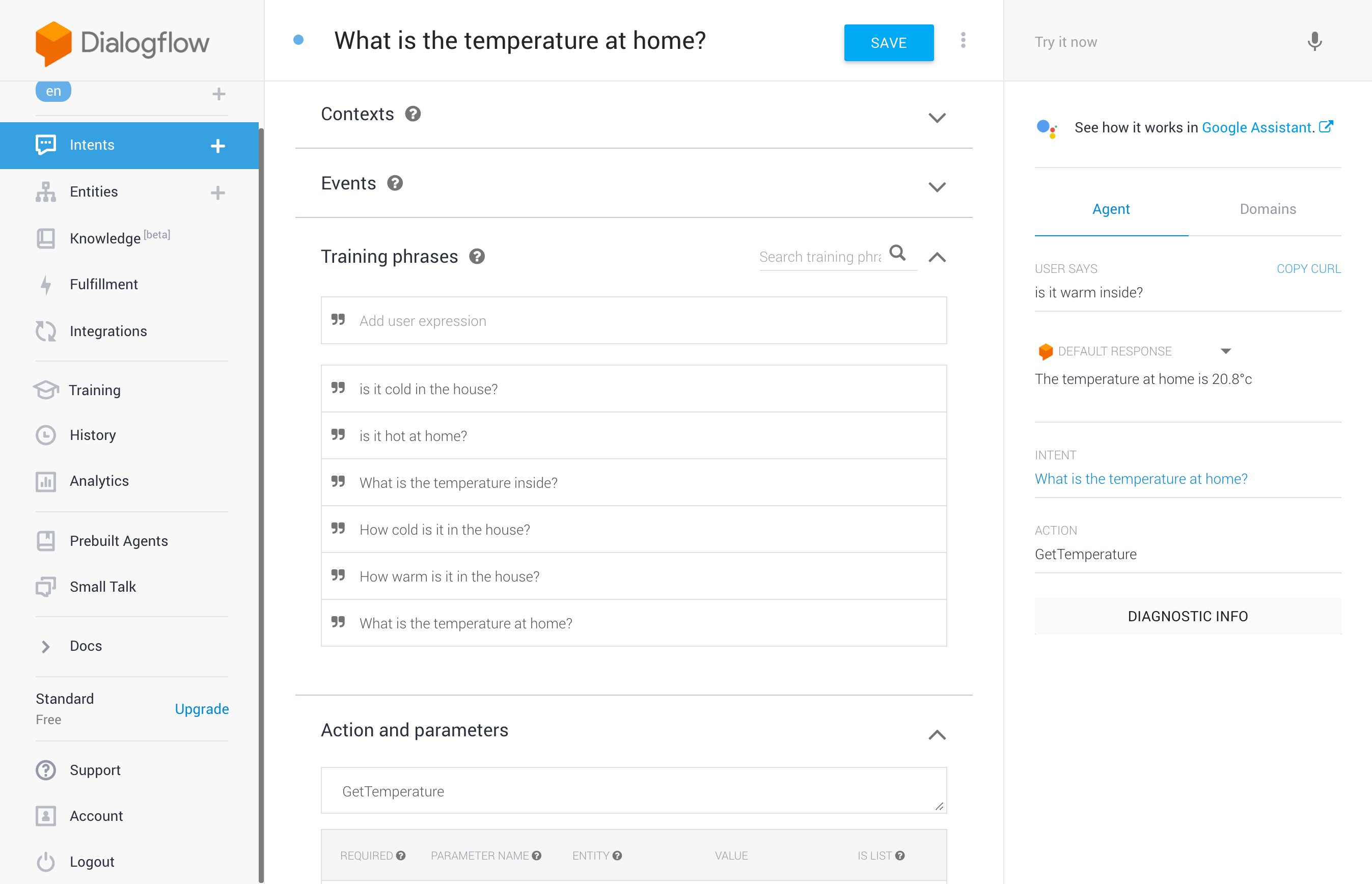

Next, we need to create an "intent". An intent takes a phrase, for example "What is the temperature at home?", works out what the user means by it and resolves it to an action, e.g. GetTemperature. Dialogflow uses machine learning to take a list of training phrases, such as:

- "is it cold in the house?"

- "is it hot at home?"

- "What is the temperature inside?"

- "What is the temperature at home?"

and build a model that can recognise other phrases the user might ask. For example, after training on the four phrases above, a user could ask

"is it warm inside?"

and the model will still understand it and associate it with this intent, even though that phrase was never explicitly added. Compare this to the Google Assistant IFTTT integration, where the user must match the trigger phrase exactly to perform an action.

Next, give this intent an action name, in this case GetTemperature (you can call it anything you like). Then, at the bottom of the page, enable the "webhook call for this intent" option. This tells Dialogflow to ask our Home Assistant instance for the response to this action, rather than using the static responses that can be configured further up the page.

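Finally, back in your Home Assistant configuration.yaml, add an intent_script section that defines what should happen for each intent. Each key under intent_script must match the action name you set in Dialogflow.

```yaml
intent_script:
  GetTemperature:
    speech:
      text: The temperature at home is {{ state_attr('climate.heating', 'current_temperature') }}°C
```

In this example, the assistant answers the question of how warm it is inside the house by reading the current_temperature attribute of the climate.heating entity. Intents can also perform actions, by adding an action section next to speech (if you already have an intent_script key, add the new intent under it rather than repeating the key):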

```yaml
intent_script:
  TurnLoungeLightsOn:
    speech:
      text: Turning lounge lights on
    action:
      - service: light.turn_on
        data:
          entity_id: light.lounge
```

This is deliberately basic, and not something you'd need to add yourself, since Google Assistant can already do this on its own.
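The "what lights have been left on?" question from the start of the post is a better fit for a template. The sketch below is an illustration, not the exact config behind my app: it assumes a hypothetical Dialogflow intent with the action name GetLightsOn and webhook fulfilment enabled, and it lists the friendly name of every light entity whose state is currently on.

```yaml
# Merge into your existing intent_script section.
# GetLightsOn is a hypothetical action name; it must match the Dialogflow intent.
intent_script:
  GetLightsOn:
    speech:
      # Collect the friendly names of all light entities that are currently on
      text: >
        {% set lights_on = states.light | selectattr('state', 'eq', 'on') | map(attribute='name') | list %}
        {% if lights_on %}These lights are still on: {{ lights_on | join(', ') }}.{% else %}All the lights are off.{% endif %}
```

Requests like "Watch Sky One" need a value pulled out of the phrase. Dialogflow intents can define parameters, and the Home Assistant dialogflow component passes them to the intent as slots, which intent_script templates can read by name. The sketch below is again hypothetical: it assumes an intent with the action name WatchChannel, a parameter named channel, and a media player called media_player.living_room_tv whose source list contains a matching channel name.

```yaml
# Merge into your existing intent_script section.
# Hypothetical: Dialogflow action "WatchChannel" with a parameter named "channel",
# and a media player called media_player.living_room_tv.
intent_script:
  WatchChannel:
    speech:
      text: "Switching to {{ channel }}"
    action:
      - service: media_player.select_source
        data:
          entity_id: media_player.living_room_tv
          # The spoken channel must match one of the player's source names
          source: "{{ channel }}"
```

On older Home Assistant releases, templated service values like source go under data_template instead of data.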

The video from the session can be watched at https://www.youtube.com/watch?v=hWZxrTTmqcM.